2 min read

Read more about Coxwave's journey to cutting deployment times by 85% and building AI-native products faster with Vercel

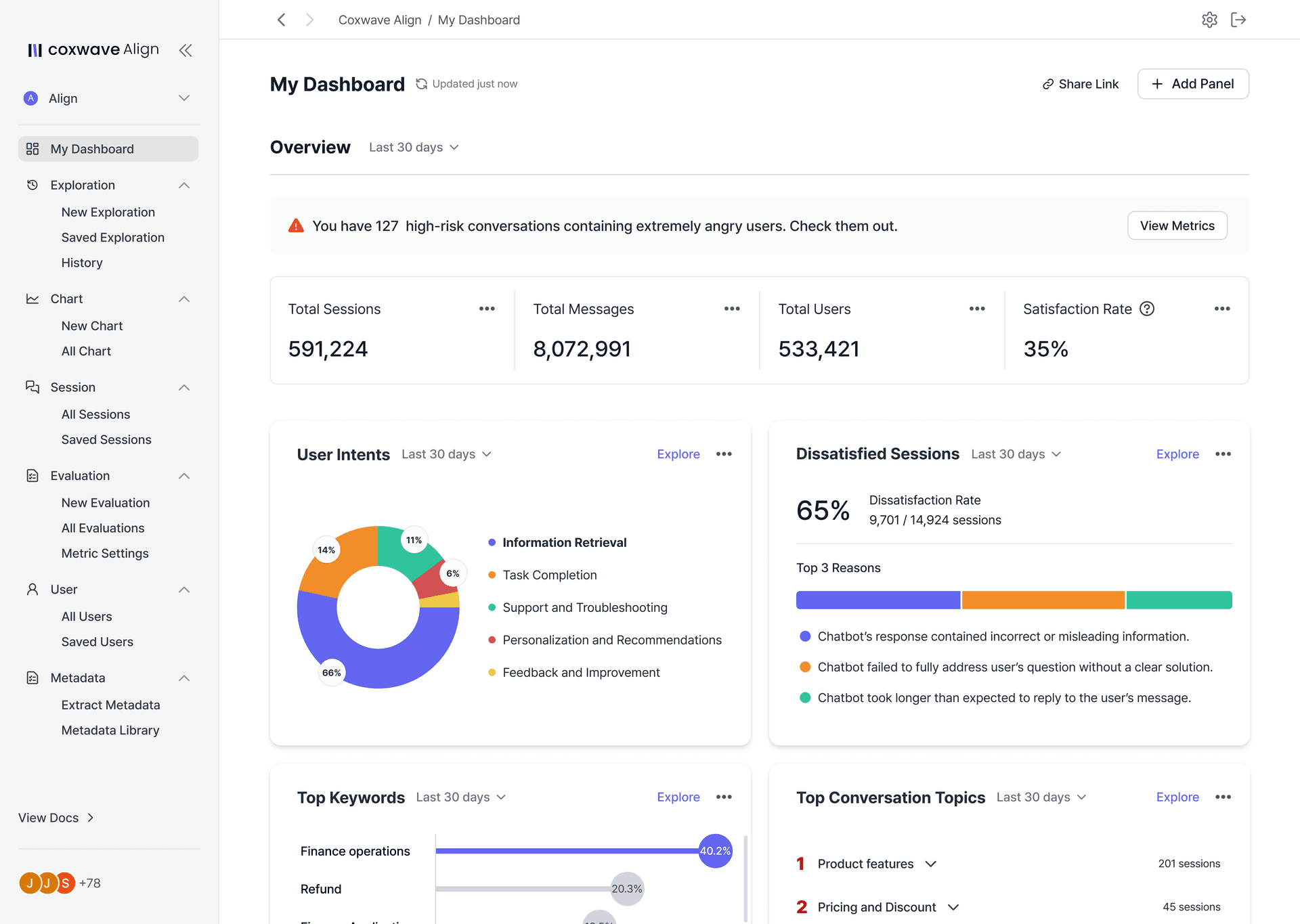

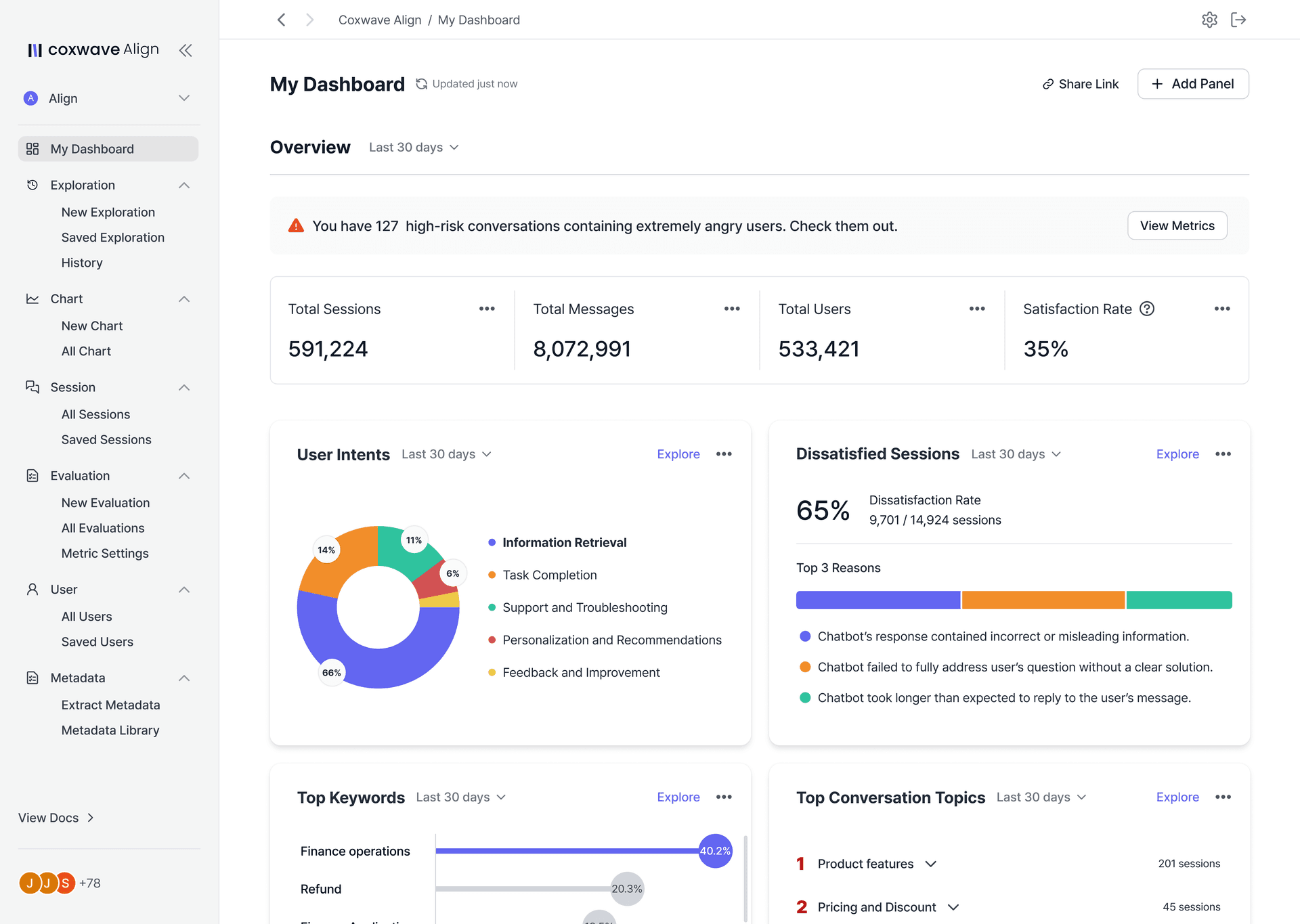

Coxwave helps enterprises build GenAI products that work at scale. With their consulting arm, AX, and their analytics platform, Align, they support some of the world’s most technically sophisticated companies, including Anthropic, Meta, Microsoft, and PwC.

Since the company’s founding in 2021, speed has been a defining trait. But speed doesn’t just mean fast models. For Coxwave, it means fast iteration, fast validation, and fast value delivery.

To meet that bar, Coxwave reimagined their web app strategy with Next.js and Vercel.

Link to headingFrom monthly releases to multiple deploys a day

Coxwave’s most pressing challenge is delivering value fast in a constantly shifting market. Traditional deployment cycles and infrastructure setups couldn't keep up.

By choosing the Next.js stack and deploying on Vercel, they created an environment that can keep up with the innovative pace of GenAI.

Vercel’s Preview Deployments, built-in CDN, and automatic scaling allow Coxwave to ship confidently and react in real time. Feature testing happens in production-like environments without disrupting live traffic. Hotfixes can be pushed the same day and new experiments can go live within hours.

The results are concrete:

Deployment times were reduced by 85%

Production deploy frequency jumped from once a week to twice per day

Experiment-to-feedback cycles shrank dramatically

52% decrease in production recovery time with Instant Rollback

In GenAI, speed and stability are non-negotiable. Vercel’s Preview Environments and rapid deploys let us test in production-like settings and ship features faster, helping us deliver real value to our analytics customers.

For Coxwave, these improvements weren’t just technical. They gave the team room to think, validate, and adapt faster than their competitors locked into slower infrastructure.

Link to headingAI-native features, tested in real time

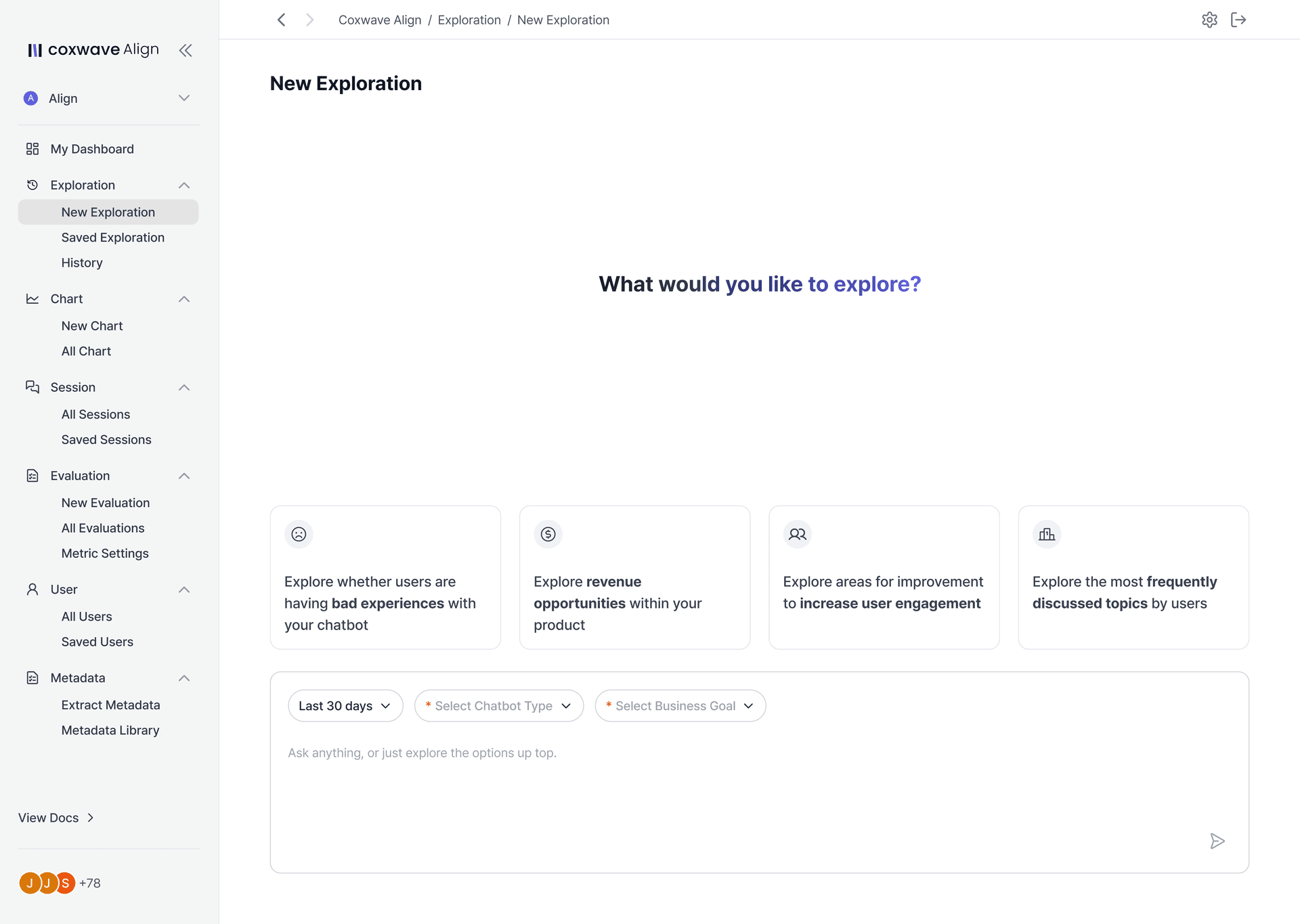

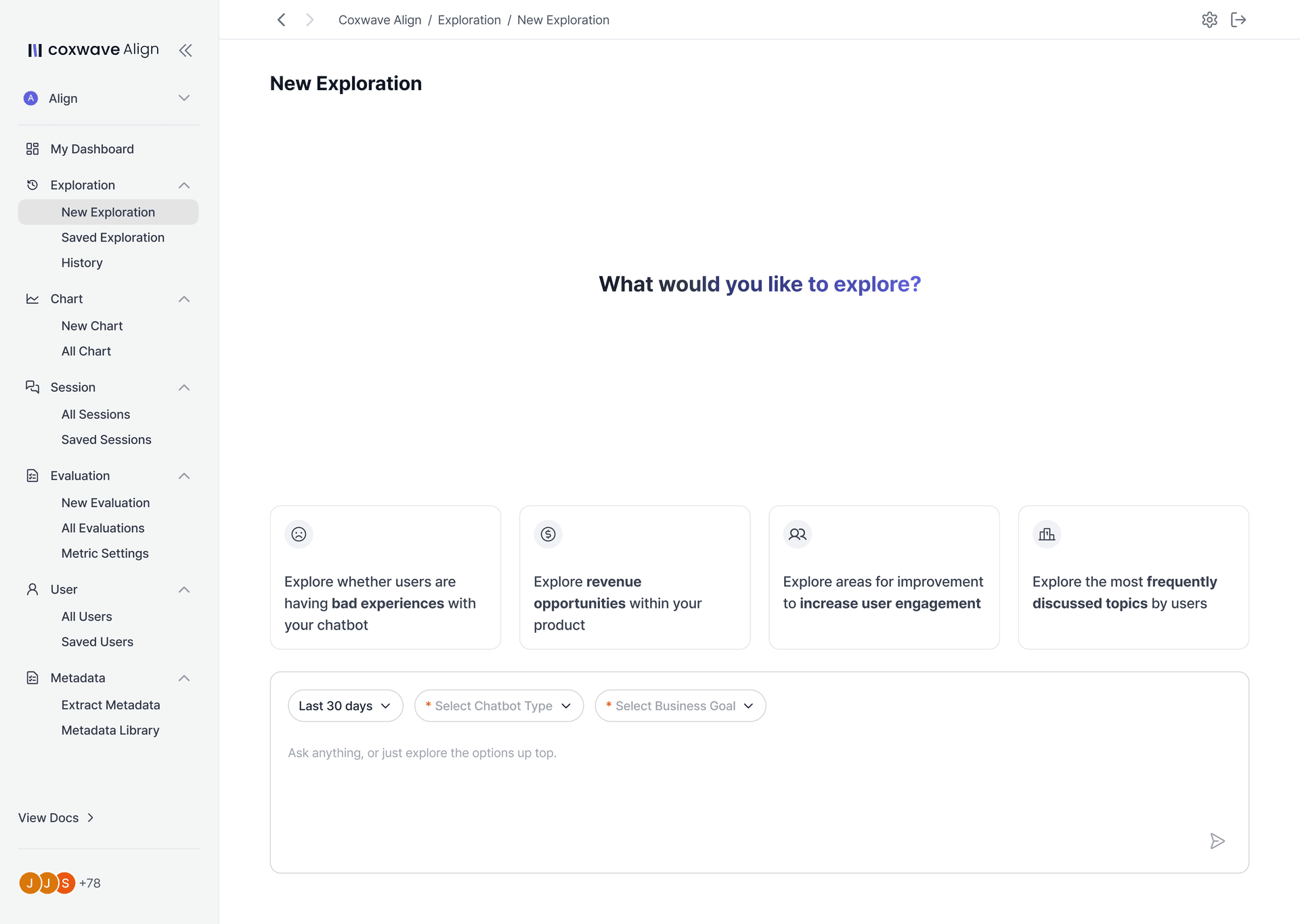

When Coxwave launched Exploration Copilot, their AI assistant for product teams, Vercel made it possible to prototype and release at full speed.

Preview Environments let the team build and test multiple LLM-powered variations in parallel, without any risk to production.

In parallel, the AI SDK, with features like the useChat hook, gave the team a fast starting point for the conversational functionality, handling streaming, state, and UI updates out of the box, so they could skip boilerplate and get to user feedback faster.

Unlock rapid AI development

Build AI-powered applications with the AI SDK.

Get started with the AI SDK

These tools combined to accelerate their entire cycle: from idea, to prototype, to production-ready product.

Link to headingBuilding without infrastructure drag

Before Vercel, Coxwave’s Next.js apps were deployed using CloudFront + S3. That worked for static sites but not for the dynamic, server-rendered needs of modern GenAI apps. Every environment setup required additional overhead and deploying fixes was slow and brittle.

By default, Coxwave now gets consistency between dev and production, simplified team ownership, and serverless compute without the operational load. The frontend team owns the full deployment lifecycle, and every branch gets its own environment for testing.

Link to headingShip like Coxwave

Coxwave plans to continue use of the Vercel and Next.js stack across upcoming projects. The setup has proven its value not just for development velocity, but for customer experience and product confidence.

Take the first step towards modernizing your tech stack

Talk to an expert to learn how you can make your website faster and more reliable on Vercel.

Contact Sales