
Faster, modern, and more scalable

For the past year, we’ve been building a new foundation for compute on top of Vercel’s Managed Infrastructure.

Today, we’d like to share our first major iteration of Vercel Functions:

Increased Concurrency: Now up to 100,000 concurrent invocations

Web Standard APIs: New Function signature using Web Request and Response

Zero-Config Streaming: Stream responses with the full power of Node.js

Long Running Functions: Up to 5 minutes on Pro and 15 minutes on Enterprise

Faster Cold Starts: Both runtime and framework improvements

Automatic Regional Failover: Increased resiliency for Enterprise teams

Get started with Vercel Functions today or continue reading to learn more about how we’ve improved the foundations of our compute platform.

Increased Concurrency

Vercel Functions now have improved automatic concurrency scaling, with up to 30,000 functions running simultaneously for Pro and 100,000 for Enterprise with the option for extended concurrency.

This ensures your project can automatically scale even during the highest bursts of traffic. We’ve designed these improvements with our customers who experience irregular traffic patterns, like news and ecommerce sites.

These concurrency improvements are the default for Hobby and Pro, and Enterprise can have even higher concurrency. These improvements are available today with zero changes in your application code.

Web Standard API Signature

When we first released Vercel Functions, the Node.js request/response objects were the standard signature for creating API endpoints. This Express-like syntax inspired both the Next.js Pages Router and standalone functions on Vercel.

```js
export default function handler(request, response) {
  // Use the `response` parameter; the Express-like helpers chain status and body
  response.status(200).json({ message: 'Hello Vercel!' });
}
```

The Node.js inspired signature for Vercel Functions

With the release of Node.js 18 and improvements to the Web platform, Node.js now supports the Web standard Request and Response APIs. To simplify Vercel Functions, we now support a unified function interface that accepts a Request and produces a Response:

```ts
export async function GET(request: Request) {
  const res = await fetch('https://api.vercel.app/blog', { ... });
  const data = await res.json();

  return Response.json({ data });
}

export async function POST(request: Request) {
  // Can also define other HTTP methods like PUT, PATCH, DELETE, HEAD, and OPTIONS
}
```

Vercel Functions now support a unified, isomorphic signature

This API interface is similar to Next.js Route Handlers. You can read cookies, headers, and other information from the incoming request using Web APIs. Because the signature follows a common standard, you can lean on references like MDN, or on Large Language Models (LLMs) like ChatGPT, to help you create APIs.

```ts
export async function GET(request: Request) {
  // Read headers
  const token = await getToken(request.headers);

  // Set cookies
  return new Response('Hello Vercel!', {
    status: 200,
    headers: { 'Set-Cookie': `token=${token.value}` },
  });
}
```

Learn the Web standard APIs and reuse your knowledge across frameworks
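One practical consequence of this signature is that a handler is just a function from a Request to a Response, so it can be called directly from a script or unit test without starting a server. The sketch below is illustrative only: the `x-user` header, the `shout` query parameter, and the `demo` function are made-up names, not part of any Vercel API.

```ts
// Hypothetical handler: greets the caller named in a made-up `x-user` header.
export async function GET(request: Request): Promise<Response> {
  const user = request.headers.get('x-user') ?? 'anonymous';
  // Query parameters are read with the standard URL API.
  const shout = new URL(request.url).searchParams.get('shout') === '1';
  const message = `Hello ${user}!`;

  return new Response(
    JSON.stringify({ message: shout ? message.toUpperCase() : message }),
    { status: 200, headers: { 'Content-Type': 'application/json' } },
  );
}

// Call the handler directly with a hand-built Request; no server is needed.
export async function demo(): Promise<{ message: string }> {
  const request = new Request('https://example.com/api/hello?shout=1', {
    headers: { 'x-user': 'Ada' },
  });
  const response = await GET(request);
  return (await response.json()) as { message: string };
}
```

Because nothing here depends on a framework, the same test approach should carry over to any runtime that implements the Web Request and Response APIs.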

The existing APIs for Serverless and Edge Functions are still supported and do not require changes. To start using the new signature:

Ensure you are using Node.js 18 (default)

Ensure you are on the latest Vercel CLI version

Zero-Config Function Streaming

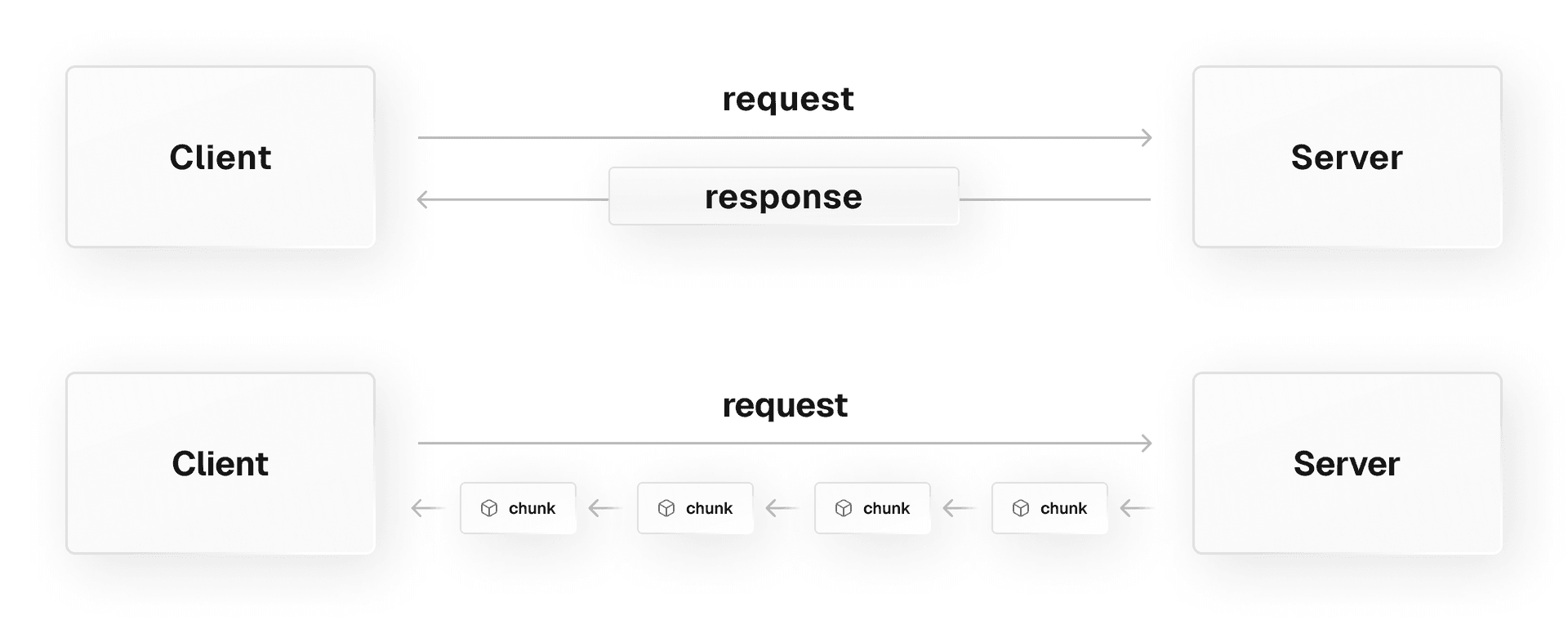

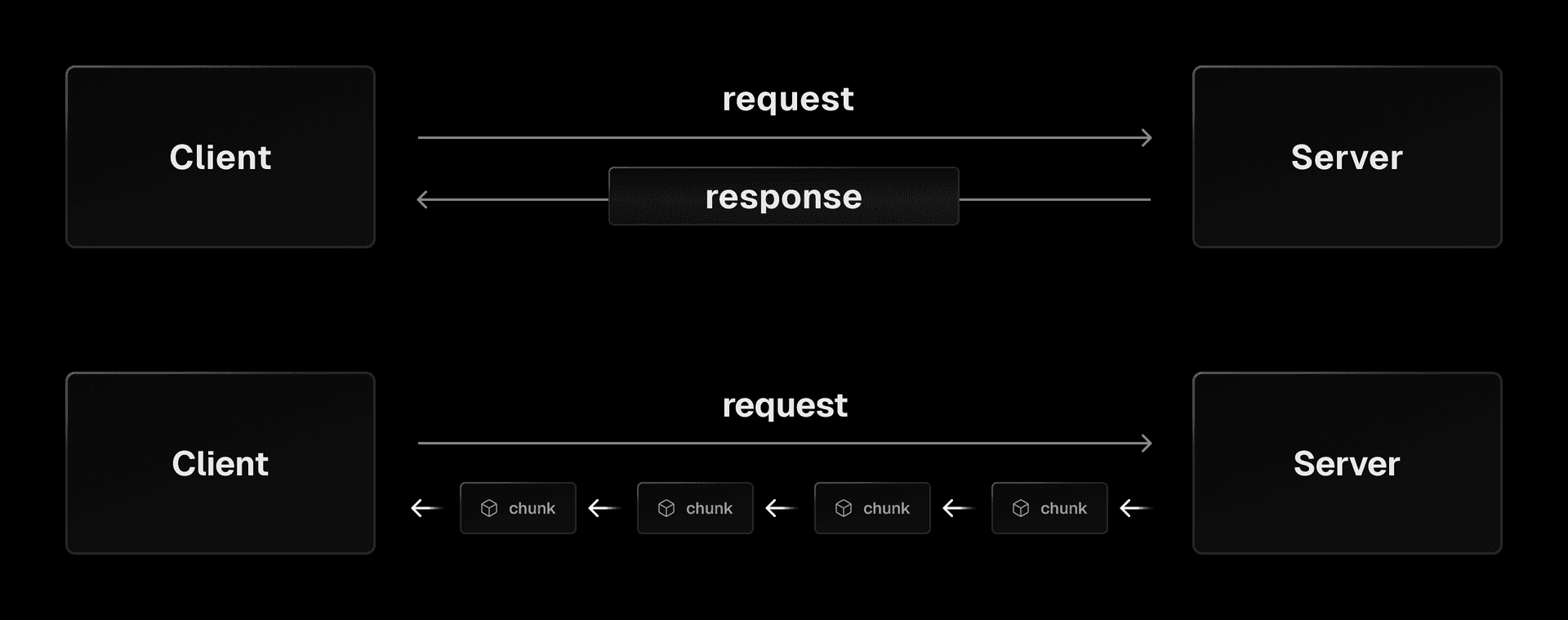

Earlier this year, we launched support for HTTP response streaming for Vercel Functions.

Support for streaming responses has enabled building chat interfaces on top of LLMs, improving initial page load performance by deferring slower or non-critical content until after the first paint, and much more.

Vercel Functions support streaming for both Node.js and Edge runtimes with zero additional configuration. This includes the new Web API signature.
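As a minimal sketch of what a streamed response can look like with the Web API signature, the handler below returns a `Response` whose body is a standard `ReadableStream`. The chunk contents and the artificial delay are assumptions chosen for illustration; a real function would enqueue data as it arrives from an upstream source.

```ts
// Stream a few text chunks to the client as they become available.
export async function GET(request: Request): Promise<Response> {
  const encoder = new TextEncoder();
  const chunks = ['Hello', ' from', ' a', ' stream'];

  const stream = new ReadableStream<Uint8Array>({
    async start(controller) {
      for (const chunk of chunks) {
        // Each enqueue hands one encoded chunk to the response body.
        controller.enqueue(encoder.encode(chunk));
        // Simulated delay standing in for slow work (e.g. waiting on an LLM).
        await new Promise((resolve) => setTimeout(resolve, 10));
      }
      // Closing the controller ends the response body.
      controller.close();
    },
  });

  return new Response(stream, {
    headers: { 'Content-Type': 'text/plain; charset=utf-8' },
  });
}
```

A client that reads the body incrementally sees each chunk as it is enqueued, while a client that calls `text()` simply receives the concatenated result.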

Learn more about streaming on the web, view our streaming documentation, or explore templates for streaming with frameworks like Next.js, SvelteKit, Remix, and more.

Long Running Functions

Pro customers can now run Vercel Functions for up to 5 minutes.

```ts
export const maxDuration = 300; // 5 minutes

export async function GET(request: Request) {
  // ...
}
```

You can run functions for up to five minutes on Pro

We also recently improved the default function duration to prevent unintentional usage, and launched new spend management controls that can send SMS notifications or trigger webhooks when your function usage passes a given spend amount.

Further, with the launch of Vercel Cron Jobs, you can run any task on a schedule by having it call your Vercel Function.
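Since a scheduled run reaches your function as a request, the target of a schedule can be written with the same Request and Response signature. The sketch below assumes the schedule itself is configured separately and that you want to reject callers that do not present a shared secret; `handleCron`, `runNightlyTask`, and the bearer-token check are hypothetical choices for illustration rather than a required pattern.

```ts
// Hypothetical task; replace with real work such as a cleanup or report job.
async function runNightlyTask(): Promise<number> {
  return 42; // pretend 42 records were processed
}

// Assumption: the scheduler sends `Authorization: Bearer <secret>`.
export async function handleCron(request: Request, secret: string): Promise<Response> {
  if (request.headers.get('authorization') !== `Bearer ${secret}`) {
    // Reject anything that does not carry the expected secret.
    return new Response('Unauthorized', { status: 401 });
  }

  const processed = await runNightlyTask();
  return new Response(JSON.stringify({ ok: true, processed }), {
    status: 200,
    headers: { 'Content-Type': 'application/json' },
  });
}
```

In a deployed function, an exported `GET` would typically read the secret from an environment variable and delegate to a helper like `handleCron`; passing the secret as a parameter here keeps the sketch easy to test.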

Learn more about configuring the maximum duration for Vercel Functions.

Faster Cold Starts

Vercel Functions now have faster cold boots, with improvements to our Managed Infrastructure for all frameworks and open-source optimizations for Next.js.

The Next.js App Router bundles server code used for APIs and dynamic pages together. We’ve improved the heuristics for smart bundling of external dependencies and the server runtime, and reduced the total size of the server runtime.

When comparing Next.js 13 with the latest Next.js 14 changes, we’ve seen cold boot times that are twice as fast, as well as smaller function bundles.

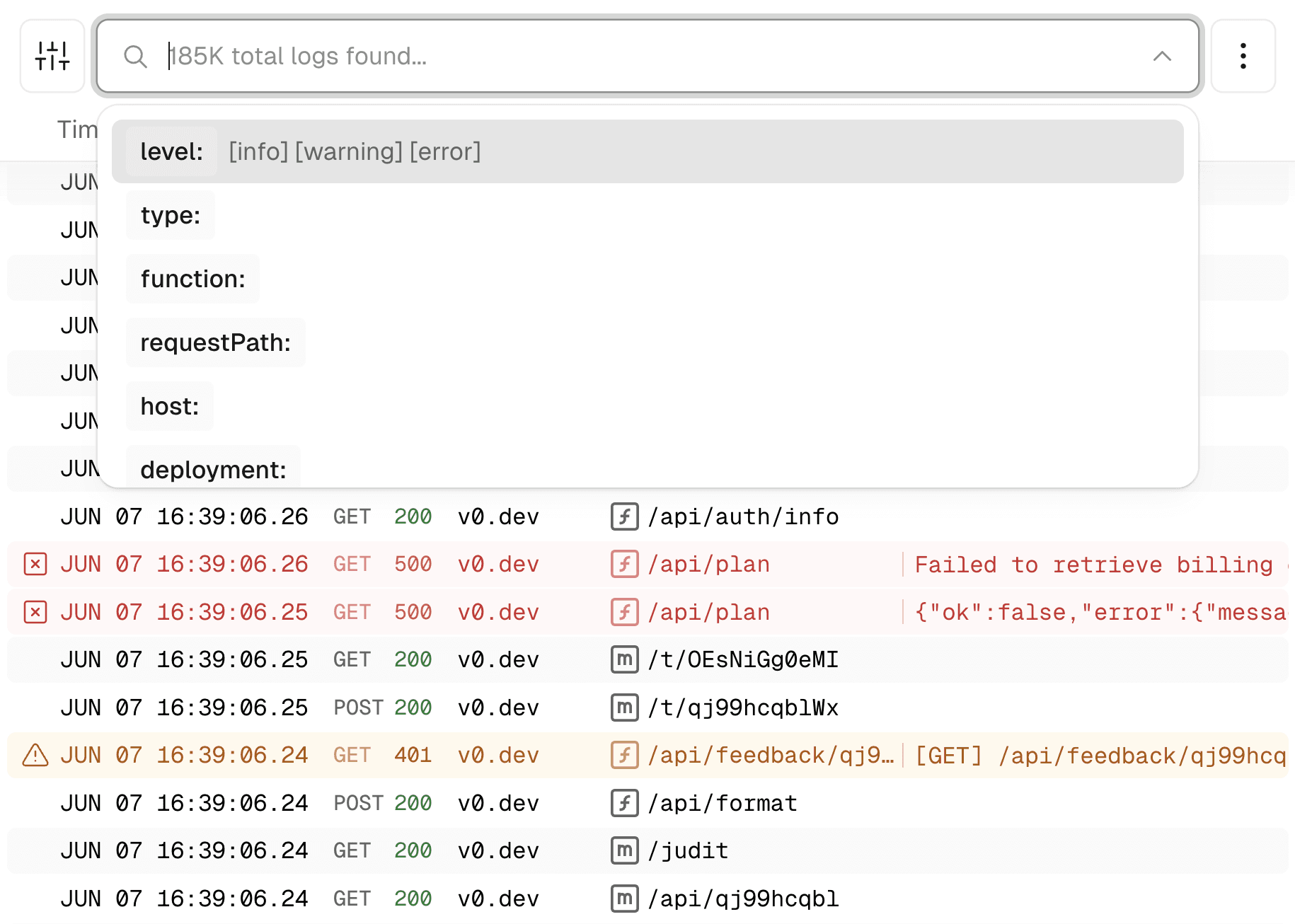

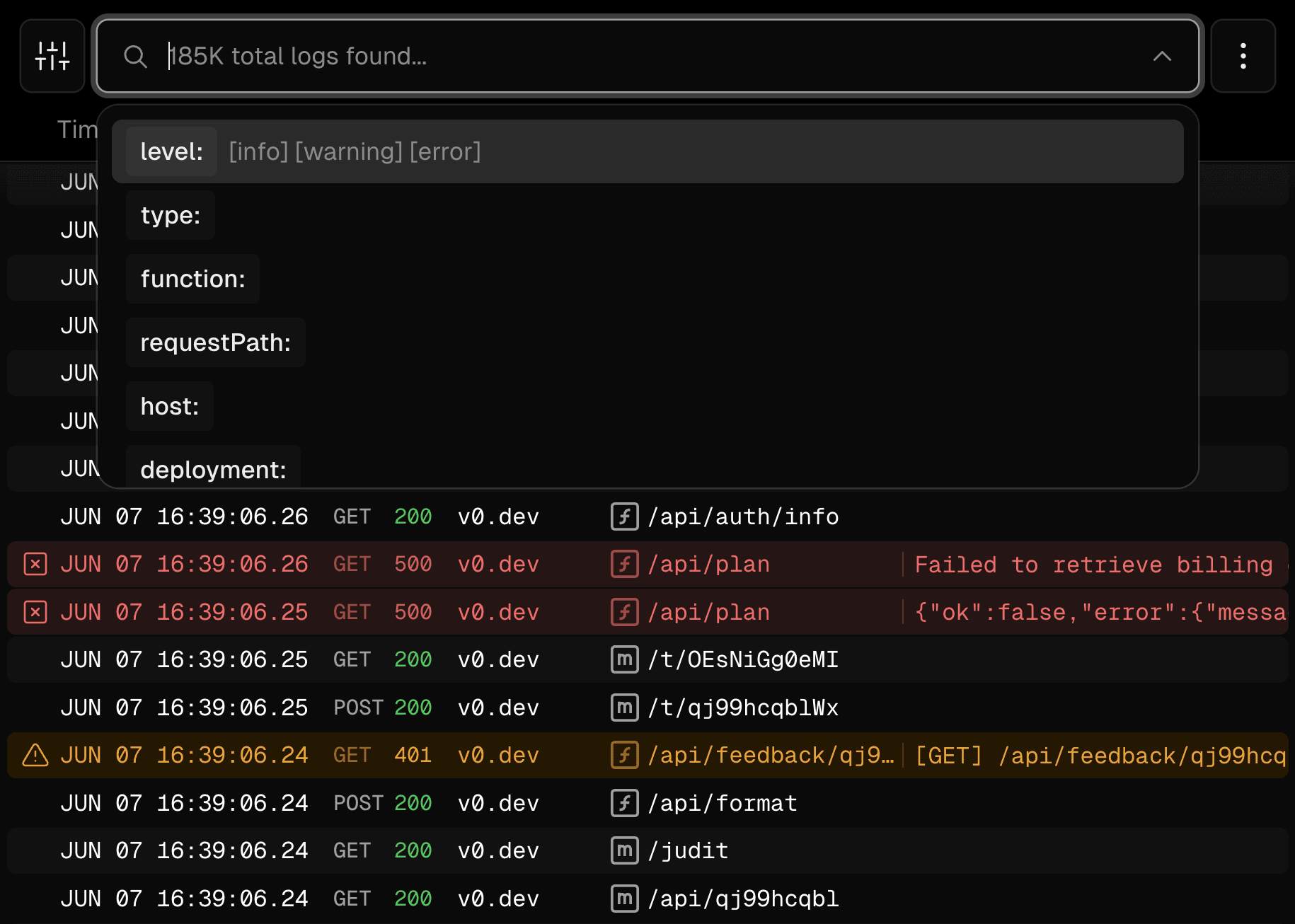

Further, Runtime Logs on Vercel now show detailed request metrics, giving you visibility into how long individual fetch requests take. Slow fetch requests, rather than function startup time, are often the reason a function is slow to respond.
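If you want a similar breakdown in your own log output, or while running locally, you can time upstream calls yourself. This is a minimal sketch and not a description of how Runtime Logs work internally; `timed` is a hypothetical helper name.

```ts
// Hypothetical helper: runs an async operation and reports how long it took.
export async function timed<T>(
  label: string,
  operation: () => Promise<T>,
): Promise<{ result: T; ms: number }> {
  const start = performance.now();
  try {
    const result = await operation();
    const ms = performance.now() - start;
    console.log(`${label} took ${ms.toFixed(1)}ms`);
    return { result, ms };
  } catch (error) {
    // Log the elapsed time for failures too, then let the error propagate.
    console.log(`${label} failed after ${(performance.now() - start).toFixed(1)}ms`);
    throw error;
  }
}
```

Inside a handler, this could wrap an upstream call such as `timed('blog fetch', () => fetch('https://api.vercel.app/blog'))`, which makes it easier to tell a slow dependency apart from a slow cold start.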

For Next.js users, upgrade to the latest version. If you are using the App Router, there is no configuration required. For the Pages Router, you’ll need to enable the experimental.bundlePagesExternals flag.

Automatic Regional Failover

Vercel's Edge Network is resilient to regional outages by automatically rerouting traffic for static assets. We are enhancing this further with support for Vercel Functions.

Vercel Functions with the Node.js runtime can now automatically fail over to a new region in the event of regional downtime. Failover regions are also supported through Vercel Secure Compute, which allows you to create private connections between your databases and other private infrastructure.

Learn more about this feature or about how Vercel helps improve your resiliency.

Increased Logging Limits

Serverless Functions now support increased log lengths, enabling better observability into your application when investigating function logs. Previously, there was a 4KB log limit per function invocation, which could lead to truncated logs when debugging. With this change, it’s now easier to debug errors that include large stack traces.

Learn more about Runtime Logs on Vercel.

Conclusion

We're continuing to evolve our compute products at Vercel. This is the first of many improvements planned to give you low-latency, instantly scalable, cost-effective compute through Vercel Functions.